This article published in The Wall Street Journal made me stop and think.

At a time when the global economy is wrestling with both the incredible potential and the enormous privacy risks of ChatGPT and generative AI, why aren’t more people asking themselves a simple question: Is the risk of sharing sensitive data externally with large language models at all similar to the risk of sharing sensitive data externally via email?

In my humble opinion, the answer is yes.

To that end, the purpose of this post is to explore the parallels between these two scenarios and discuss how the right tools can help mitigate risks, particularly when it comes to protecting the massive amounts of sensitive data that employees voluntarily share every day via email.

When it comes to sharing sensitive information, ChatGPT and email workflows pose similar risks, including:

To minimize the risk of sensitive data being accidentally exposed via ChatGPT or email workflows, consider the following best practices:

The risks associated with sharing sensitive information with ChatGPT are remarkably similar to those involved in sending out emails containing sensitive data. However, organizations can effectively mitigate these risks by implementing proper measures including employee training, upstream data governance, and downstream data controls.

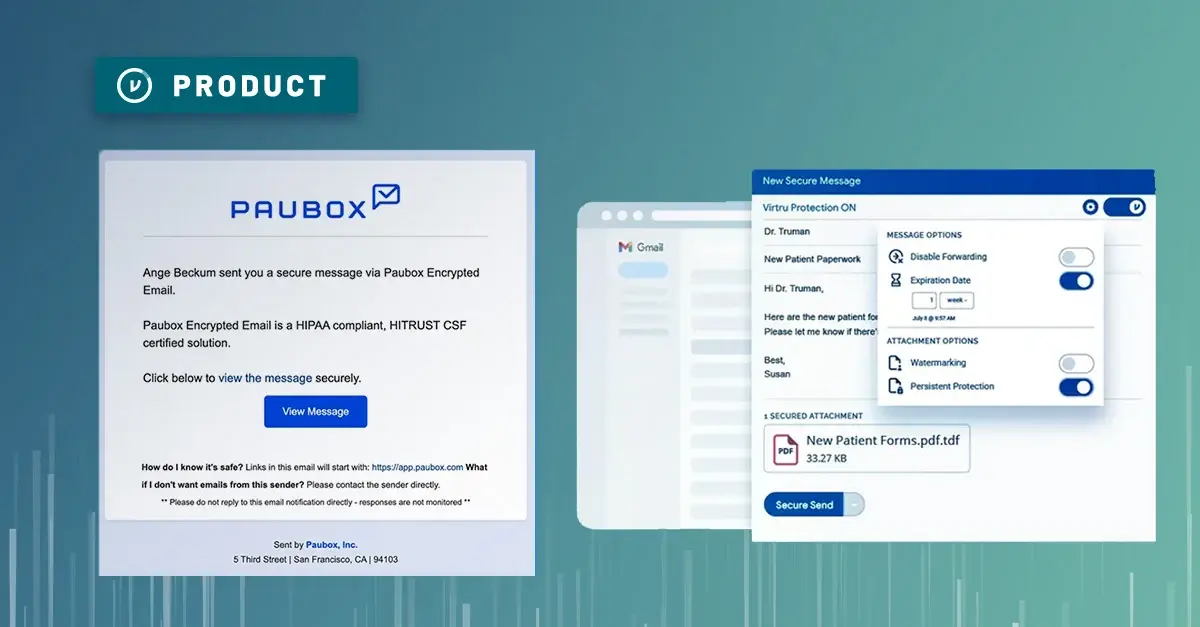

To control risks associated with downstream email workflows, consider implementing best-in-class email security tools like Virtru, which adds an extra layer of protection, ensuring that sensitive data shared via email workflows remains secure. By adopting these practices and leveraging the right tools, organizations can confidently share sensitive data externally via email and ChatGPT workflows.

A proven executive and entrepreneur with over 25 years of experience developing high-growth software companies, Matt serves as Virtru’s CMO and leads all aspects of the company’s go-to-market motion within the data protection and Zero Trust security ecosystems.

View more posts by Matt Howard
